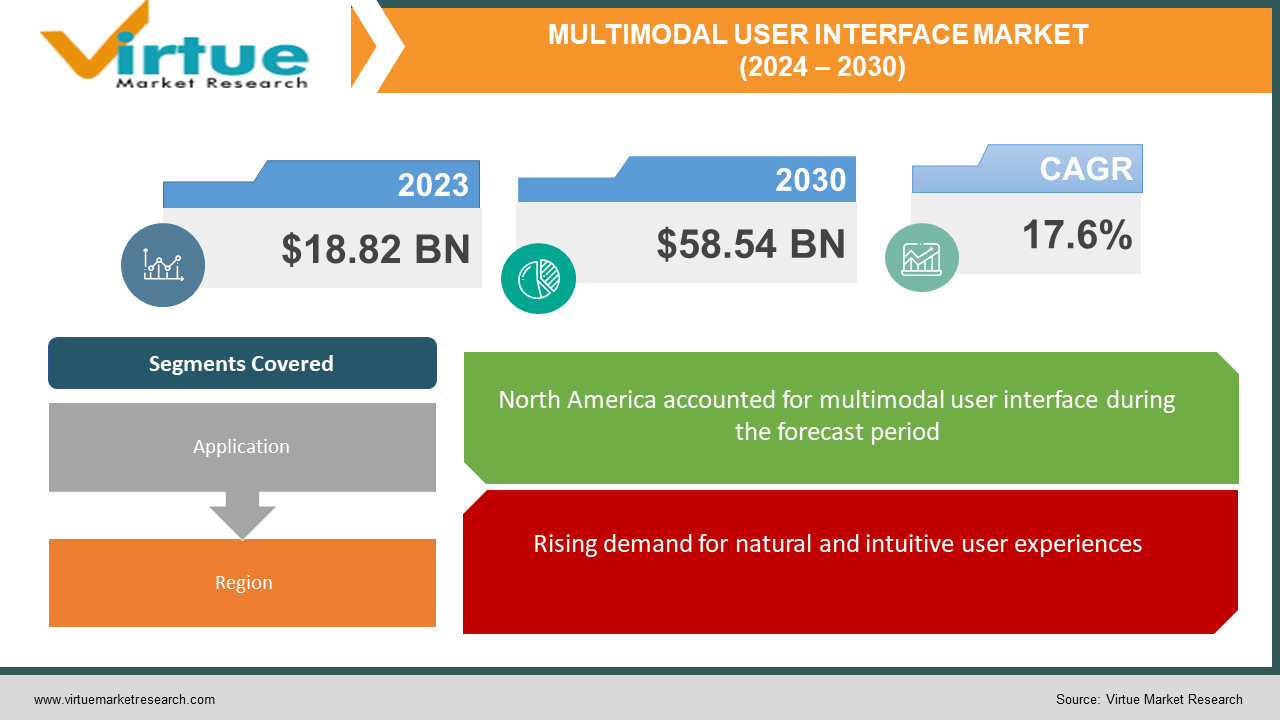

Multimodal User Interface Market Size (2024 – 2030)

Global Multimodal UI Market size was valued at USD 18.82 billion in 2023 and is projected to reach USD 58.54 billion by 2030, growing at a compound annual growth rate (CAGR) of 17.6% during 2024-2030.
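As an arithmetic check on these headline figures, the sketch below recomputes the growth rate implied by the 2023 and 2030 values using the standard CAGR formula, CAGR = (end value / start value)^(1/n) - 1. It assumes n = 7 compounding years (2023 to 2030), since the report does not state how it counts the period; the function and constant names are illustrative only.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the constant annual growth rate that turns start_value into end_value."""
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("values and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def project_value(start_value: float, cagr: float, years: int) -> float:
    """Return start_value compounded at a constant annual rate for `years` years."""
    return start_value * (1 + cagr) ** years


# Headline figures from the report, in USD billions.
BASE_2023 = 18.82
FORECAST_2030 = 58.54
# Assumption: seven compounding periods between the 2023 base and 2030.
YEARS = 2030 - 2023

if __name__ == "__main__":
    print(f"Implied CAGR: {implied_cagr(BASE_2023, FORECAST_2030, YEARS):.1%}")
    print(f"2030 value at 17.6%: USD {project_value(BASE_2023, 0.176, YEARS):.2f} billion")
```

Under that assumption the figures are mutually consistent: compounding USD 18.82 billion at 17.6% for seven years gives roughly USD 58.54 billion.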

The multimodal user interface (MMUI) market is growing quickly, with some estimates placing its 2023 value at around $17.56 billion, driven by the rising popularity of smart devices and demand for natural, intuitive interactions. Examples include smartphones with voice assistants, smart speakers controlled by voice and gestures, and augmented reality experiences that blend physical and digital worlds. Fueled by advances in AI such as natural language processing and computer vision, MMUIs are making technology more accessible and user-friendly, moving toward a future where interacting with machines feels as natural as talking to another person.

Key Market Insights:

The multimodal UI market is expanding rapidly, with longer-range projections placing it at around $62.93 billion by 2032. Growth is driven by demand for natural interactions, the proliferation of smart devices, and a growing focus on accessibility: for example, controlling a home with voice commands, or switching seamlessly between touch and gestures on a phone. AI and personalization are accelerating this shift by making interactions more intuitive and tailored to individual needs. Challenges remain, including robust data security and seamless compatibility across devices. Despite these hurdles, the multimodal UI market is poised to change the way people experience technology, making it more natural and accessible.

Multimodal User Interface Market Drivers:

Rising demand for natural and intuitive user experiences

People are increasingly looking for ways to interact with technology in a more natural and intuitive way, just like they interact with the world around them. Multimodal UIs allow users to interact with devices using a combination of voice, touch, gestures, and even eye movement, making the interaction more natural and enjoyable.

Advancement of AI technologies

Artificial intelligence (AI) is playing a key role in improving the accuracy and efficiency of multimodal interactions. For example, natural language processing (NLP) is used to understand voice commands, while computer vision is used to track hand and eye movements. As AI technologies continue to advance, we can expect to see even more sophisticated and user-friendly multimodal UIs emerge.

Improved accessibility for people with disabilities

Multimodal UIs are extending technology's benefits to everyone, particularly people with disabilities. Someone with limited hand dexterity can use voice commands instead of a keyboard and mouse, and someone with limited mobility can control a smart home with hand gestures. Eye tracking goes further, letting people with various motor impairments operate interfaces with their gaze alone. As inclusivity becomes a top priority, demand for MMUIs is rising, paving the way for technology that is accessible to and empowering for all.

Multimodal User Interface Market Challenges and Restraints:

Training effective AI models for multimodal interactions requires massive amounts of high-quality data, raising concerns about user privacy and potential biases

The large data requirements of multimodal AI cut both ways. On one hand, that data fuels intelligent systems that understand and respond to a combination of human cues (voice, touch, gaze, and gestures), such as controlling a smart home with a spoken request confirmed by a hand gesture, or navigating virtual worlds through body movement. On the other hand, collecting, storing, and processing such intimate data raises significant privacy concerns: data breaches or unauthorized access could expose sensitive information and erode user trust, so responsible use depends on informed user consent and robust security measures. Training data that does not represent all users can also introduce bias, for example causing a system to recognize some accents or gestures less reliably than others.

Developing and integrating sophisticated multimodal UIs can be complex and expensive, requiring specialized expertise and resources

Intuitive, multi-layered interactions come at a cost. First, the complexity demands expertise: combining voice commands, touch, and eye movements requires engineers skilled in AI, natural language processing, and gesture recognition working together. The work goes beyond coding buttons; it requires understanding the nuances of human behavior and translating them into seamless digital responses. Second, cost is a hurdle. Integrating diverse modalities requires powerful hardware and software, and building custom solutions from scratch raises the bill further, which slows widespread adoption.

Processing multimodal inputs in real-time can be computationally intensive, requiring powerful devices that may not be universally accessible

Seamless interaction through a combination of voice, touch, and gestures faces a less visible challenge: processing power. Each modality (voice, touch, gaze) generates its own data stream, and all of them must be interpreted concurrently and answered in real time, for example when a user speaks and gestures at once and expects immediate visual feedback. This typically requires powerful processors and graphics hardware found mainly in high-end devices; an average smartphone or laptop may struggle to keep up, leading to lag and broken interactions. The result is an accessibility barrier: if cutting-edge hardware is the gateway to immersive multimodal experiences, users with older or lower-cost devices may miss out on conveniences such as voice commands and gesture controls.

Market Opportunities:

The multimodal UI (MMUI) market offers significant opportunities, fueled by the convergence of rising user demand, technological advances, and growing attention to accessibility.

First, the desire for natural interactions is propelling MMUI adoption, from controlling a car with voice commands to navigating virtual worlds with gestures. This intuitiveness increases user engagement and satisfaction, creating room for innovative MMUI solutions in sectors such as automotive, gaming, and AR/VR.

Second, advances in AI are making MMUIs more accurate and efficient, with systems that better understand natural language and interpret intricate hand gestures. This opens the door to personalized experiences, tailored assistance, and even emotion recognition, with commercial potential in areas such as healthcare, education, and customer service.

Finally, inclusivity is shaping the MMUI landscape. MMUIs offer alternative interaction methods, such as voice control for people with dexterity challenges or eye tracking for individuals with motor limitations. This expands the user base and aligns with the growing focus on ethical technology, creating a market for accessible MMUI solutions across diverse industries.

Overall, the MMUI market gives businesses an opportunity to create user-centric, AI-powered, and inclusive interfaces that will shape how people interact with technology.

MULTIMODAL USER INTERFACE MARKET REPORT COVERAGE:

| Report Metric | Details |
| --- | --- |
| Market Size Available | 2023 - 2030 |
| Base Year | 2023 |
| Forecast Period | 2024 - 2030 |
| CAGR | 17.6% |
| Segments Covered | By Application and Region |
| Various Analyses Covered | Global, Regional & Country Level Analysis, Segment-Level Analysis, DROC, PESTLE Analysis, Porter’s Five Forces Analysis, Competitive Landscape, Analyst Overview on Investment Opportunities |
| Regional Scope | North America, Europe, APAC, Latin America, Middle East & Africa |
| Key Companies Profiled | Google, Apple, Microsoft, OpenAI, Immersive Touch |

Multimodal User Interface Market Segmentation - By Application

- Smart homes
- Automotive
- Gaming
- Manufacturing and Robotics
- Virtual and Augmented Reality

From smart homes controlled by voice and gestures to immersive VR experiences enhanced by hand tracking, multimodal UIs are transforming diverse sectors. Smart homes and automotive currently lead, with voice commands, touchscreens, and gestures streamlining daily tasks and in-car interactions. Gaming benefits from the deeper engagement created by combining voice, gestures, and haptic feedback, while VR/AR uses natural interactions such as hand tracking for richer experiences. In manufacturing and robotics, hands-free operation via voice and gestures improves efficiency and safety. Sectors such as healthcare and education are still emerging in this space, pointing to further room for growth across industries.

Multimodal User Interface Market Segmentation - Regional Analysis

- North America
- Asia-Pacific
- Europe
- South America
- Middle East and Africa

North America holds the largest market share, supported by strong technology infrastructure, early adoption of smart devices, and significant R&D investment. Europe is expected to grow steadily on the back of government initiatives and a strong technology presence. Asia-Pacific is the fastest-growing region, driven by rapidly rising smartphone and smart device penetration. Africa is the slowest-growing region because of limited access to smart devices and internet connectivity, while Latin America and the Middle East are showing moderate growth as smartphone penetration and technology investment increase.

COVID-19 Impact Analysis on the Multimodal User Interface Market

The COVID-19 pandemic had a mixed effect on the multimodal UI (MMUI) market. Initial disruptions and economic downturns slowed growth, but the pandemic also accelerated trends favoring MMUIs. The shift to remote work and online interaction boosted MMUI adoption in smart homes, video conferencing, and AR/VR collaboration tools, while heightened hygiene concerns increased interest in touchless interfaces such as voice commands and gesture control, as well as in voice-controlled devices and wearables. Supply chain disruptions and budget constraints, however, hampered production and delayed some projects. On balance, the pandemic appears to have had a net positive effect, accelerating the shift toward natural and touchless interactions, and long-term growth may exceed pre-pandemic forecasts.

Latest Trends/Developments

The multimodal UI (MMUI) market is seeing rapid advances. The focus is shifting toward embodied interactions that integrate voice, touch, gestures, and even brain-computer interfaces, such as controlling AR objects with hand and eye movements or using facial expressions to navigate virtual worlds. AI integration is deepening: natural language processing (NLP) can interpret complex instructions and sentiment, while computer vision tracks subtle body-language cues. Accessibility is a top priority, with MMUIs incorporating eye tracking for individuals with limited mobility and voice control for those with dexterity challenges. The automotive industry is a hotbed of innovation, with gesture-controlled infotainment systems and voice-activated navigation becoming common. Augmented reality (AR) and virtual reality (VR) platforms increasingly rely on MMUIs, blurring the line between physical and digital worlds. Finally, haptic feedback adds a new dimension by providing realistic touch sensations that enhance immersion and engagement. Taken together, these trends point to an increasingly multimodal future for how people interact with technology.

Key Players:

- Google
- Apple
- Microsoft
- OpenAI
- Immersive Touch

Chapter 1. MULTIMODAL USER INTERFACE MARKET – Scope & Methodology

1.1 Market Segmentation

1.2 Scope, Assumptions & Limitations

1.3 Research Methodology

1.4 Primary Sources

1.5 Secondary Sources

Chapter 2. MULTIMODAL USER INTERFACE MARKET – Executive Summary

2.1 Market Size & Forecast – (2024 – 2030) ($M/$Bn)

2.2 Key Trends & Insights

2.2.1 Demand Side

2.2.2 Supply Side

2.3 Attractive Investment Propositions

2.4 COVID-19 Impact Analysis

Chapter 3. MULTIMODAL USER INTERFACE MARKET – Competition Scenario

3.1 Market Share Analysis & Company Benchmarking

3.2 Competitive Strategy & Development Scenario

3.3 Competitive Pricing Analysis

3.4 Supplier-Distributor Analysis

Chapter 4. MULTIMODAL USER INTERFACE MARKET Entry Scenario

4.1 Regulatory Scenario

4.2 Case Studies – Key Start-ups

4.3 Customer Analysis

4.4 PESTLE Analysis

4.5 Porter's Five Forces Model

4.5.1 Bargaining Power of Suppliers

4.5.2 Bargaining Power of Customers

4.5.3 Threat of New Entrants

4.5.4 Rivalry among Existing Players

4.5.5 Threat of Substitutes

Chapter 5. MULTIMODAL USER INTERFACE MARKET – Landscape

5.1 Value Chain Analysis – Key Stakeholders Impact Analysis

5.2 Market Drivers

5.3 Market Restraints/Challenges

5.4 Market Opportunities

Chapter 6. MULTIMODAL USER INTERFACE MARKET – By application

6.1 Introduction/Key Findings

6.2 Smart homes

6.3 Automotive

6.4 Gaming

6.5 Manufacturing and Robotics

6.6 Virtual and Augmented Reality

6.7 Y-O-Y Growth trend Analysis By application

6.8 Absolute $ Opportunity Analysis By application, 2024-2030

Chapter 7. MULTIMODAL USER INTERFACE MARKET, By Geography – Market Size, Forecast, Trends & Insights

7.1 North America

7.1.1 By Country

7.1.1.1 U.S.A.

7.1.1.2 Canada

7.1.1.3 Mexico

7.1.2 By application

7.1.3 Countries & Segments - Market Attractiveness Analysis

7.2 Europe

7.2.1 By Country

7.2.1.1 U.K.

7.2.1.2 Germany

7.2.1.3 France

7.2.1.4 Italy

7.2.1.5 Spain

7.2.1.6 Rest of Europe

7.2.2 By application

7.2.3 Countries & Segments - Market Attractiveness Analysis

7.3 Asia Pacific

7.3.1 By Country

7.3.1.1 China

7.3.1.2 Japan

7.3.1.3 South Korea

7.3.1.4 India

7.3.1.5 Australia & New Zealand

7.3.1.6 Rest of Asia-Pacific

7.3.2 By application

7.3.3 Countries & Segments - Market Attractiveness Analysis

7.4 South America

7.4.1 By Country

7.4.1.1 Brazil

7.4.1.2 Argentina

7.4.1.3 Colombia

7.4.1.4 Chile

7.4.1.5 Rest of South America

7.4.2 By application

7.4.3 Countries & Segments - Market Attractiveness Analysis

7.5 Middle East & Africa

7.5.1 By Country

7.5.1.1 United Arab Emirates (UAE)

7.5.1.2 Saudi Arabia

7.5.1.3 Qatar

7.5.1.4 Israel

7.5.1.5 South Africa

7.5.1.6 Nigeria

7.5.1.7 Kenya

7.5.1.8 Egypt

7.5.1.9 Rest of MEA

7.5.2 By application

7.5.3 Countries & Segments - Market Attractiveness Analysis

Chapter 8. MULTIMODAL USER INTERFACE MARKET – Company Profiles – (Overview, Product Portfolio, Financials, Strategies & Developments)

8.1 Google

8.2 Apple

8.3 Microsoft

8.4 OpenAI

8.5 Immersive Touch


Frequently Asked Questions

Global Multimodal UI Market size was valued at USD 18.82 billion in 2023 and is projected to grow to USD 58.54 billion by 2030, at a compound annual growth rate (CAGR) of 17.6% during 2024-2030.

The market is driven by rising demand for natural and intuitive user experiences, advancements in AI technologies, and the ability of multimodal UIs to make technology more accessible to people with disabilities by providing alternative ways to interact with devices.

By application, the market is divided into five segments: smart homes, automotive, gaming, virtual and augmented reality, and manufacturing and robotics.

North America holds the largest share of the Multimodal User Interface Market, while Asia-Pacific is the fastest-growing region.

Google, Apple, Microsoft, OpenAI, Immersive Touch